Introduction

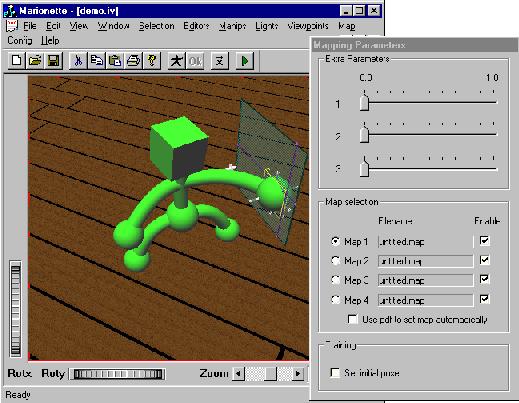

The Marionette is a simple performance animation system that allows the
user to explore a variety of ways to map the output of motion capture
devices (such as the Polhemus FastTrak or InsideTrak) to the pose of a
graphical character.

My goal with the Marionette is to explore these issues:

- How should the mapping from the sensor data to the character be
  specified? This gets interesting when the mapping is nonliteral: for
  example, when you want to animate a Luxo lamp, or when, as in the case
  of the Marionette, the sensors have fewer degrees of freedom than the
  character.

- How can very simple pattern recognition techniques be used to overcome
  the problem (opportunity?) of having very few input degrees of freedom?

- What kind of funky, nonliteral maps can you design with such a system?
  Is it possible to get interesting cartoony effects, for example, with a
  small set of maps that the user trains up quickly?

More later.
